Are you curious about SnowSQL? Then, let’s dedicate this new post of What’s under the Snowflake(s)? of it! We focus on the challenge of Week 23 – Basic from Frosty Friday.

“SnowSQL is the command line client for connecting to Snowflake to execute SQL queries and perform all DDL and DML operations, including loading data into and unloading data out of database tables.” (https://docs.snowflake.com/en/user-guide/snowsql.html). This week, we’re going to ask you to use it for one of the features that it’s best known for however: the Bulk loading feature. You’ve been asked to upload some files into a table and the Sales Manager said that “it’ll only take you about 2 minutes”. So we’re pretty clear that it’ll take us at least 15.

Frosty Friday ‘Week 23 – Basic’

The challenge consists of :

How to proceed? A possible strategy!

We proceed step by step in order to guide the reader through a possible resolution strategy

- P1: Download the zip file containing the CSV files

- P2: Download and install SnowSQL on your machine

- P3: Connect to your Snowflake instance with SnowSQL

- P4: Bulk load the files ending with a ‘1’

- P5: Skip any erroneous file.

P1 & P2: Download the zip file and SnowSQL

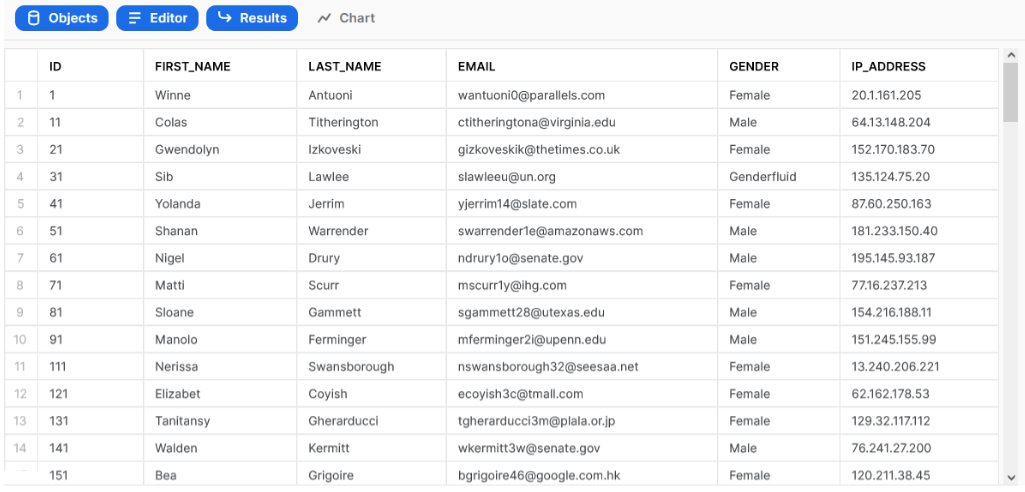

After having downloaded the file, it is better to focus a little bit on the kind of data or tables that are in your folder. In this way it will be easier to build the desired ending table. Here, it can be useful to see the picture attached to the challenge Week 23.

Hence, it follows quite natural to write that:

-- Create your stage

CREATE OR REPLACE TABLE THIS_IS_YOUR_STAGE (

id VARCHAR

, first_name VARCHAR

, last_name VARCHAR

, email VARCAHR

, gender VARCHAR

, ip_address VARCHAR);P3: Connect to your Snowflake instance with SnowSQL

At this point we need to open the terminal and use SNOWSQL.

snowsql -a <your_account> -u <your_username>We need to upload the set of files into the stage below. But if you want a more clear and precise recapitulation about how uploading data work… then you have to read this blog!

Type SQL statements or !help

<your_username>#(no warehouse)@(no database).(no schema)>USE ROLE <your_role>;

1 Row(s) produced. Time Elapsed: 0.088s

YOUR_ROLE#(no warehouse)@(no database).(no schema)>USE WAREHOUSE

<your_warehouse>;

...

YOUR_ROLE#YOUR_WAREHOUSE@YOUR_DATABASE.YOUR_SCHEMAP4: Bulk load the files ending with a ‘1’

PUT 'FILE://C:/My/Path/To/Filestoingest/data_batch_*1.csv' @YOUR_DATABASE.YOUR_SCHEMA.THIS_IS_YOUR_STAGE;

If you want to be sure that you there is something into your stage, you can check it with:

-- View files in stage

list @THIS_IS_YOUR_STAGE;Back in your Skowflake’s worksheet. Here you can create a FILE_FORMAT. It describes a set of settings to access or load into Snowflake tables.

-- To set the formatting settings

CREATE FILE FORMAT your_file_format_setting

type = CSV

field_delimiter = ','

record_delimiter = '\n'

field_optionally_enclosed_by = '"'

escape = '\\'

skip_header = 1;Now, you can copy all the data into the TABLE. You can use the command COPY INTO and you can decide how to ingest the data. As a matter of fact, we have created a FILE FORMAT that we can insert into the COPY INTO. In order to skip any erroneous file, you need to specify some copy options, i.e. ON_ERROR.

P5: Skip any erroneous file.

--To copy and format your data

COPY INTO THIS_IS_YOUR_STAGE

FROM @THIS_IS_YOUR_STAGE

FILE_FORMAT = (format_name = 'your_file_format_setting')

ON_ERROR = SKIP_FILE;ON_ERROR can be used only for data loading. It specifies the error handling for the load operation. Since it can have different values, it is very important to choose the right one. In this case, SKIP_FILE. The command is self explanatory.

At this point we just have to… SELECT * from our THIS_IS_OUR_STAGE table and …