When you are developing a DBT project, it’s usual to create, at least, two critical environments: Development and Production. This blog post will delve into the nuances of these environments in DBT with Snowflake. Therefore, we’ll explore the essential differences, purposes, and roles of Development and Production environments, highlighting their importance in the realm of data engineering.

What are Development and Production Environments?

Understanding how to effectively manage these environments is crucial for maintaining efficiency, accuracy, and security in your data projects, whether you’re an experienced data professional or just starting in the field. However, we first must define what Development and Production environments are.

Development

Significantly, the Development environment, as detailed in our table, is characterized by its role as a dynamic testing ground. Here, data professionals have the freedom to innovate, test, and refine new features, code, and data models in an isolated setting, ensuring that any changes do not disrupt the stable Production environment. Therefore, this isolation is key to maintaining the flexibility and adaptability that are hallmarks of the Development phase.

Production

On the other hand, the Production environment represents the culmination of all tested and refined work from the Development stage. It’s the definitive platform where we deploy code and data models for final use. Stability, performance, and security are the cornerstones of the Production environment. It is optimized for reliability and scalability, with stringent access controls and security measures firmly in place to safeguard data integrity and operational continuity.

| DEVELOPMENT | PRODUCTION | |

| DEFINITION AND PURPOSE | – A space for testing and experimentation. – Used for developing new features, code, and data models. | – The stable, live environment for final use. – Hosts fully tested and approved code and data models. |

| CHARACTERISTICS | – Flexible and adaptable setup. – Isolated from production data to prevent disruptions. – Focused on innovation, testing, and iterative improvements. | – Emphasizes stability and reliability. – Optimized for performance and scalability. – Can create automatically JOBS. |

Implementing Development and Production in DBT within Snowflake

Implementing effective Development and Production roles and objects in Snowflake is a critical step for managing your DBT projects efficiently. This setup involves creating specific structures and assigning appropriate privileges for each environment, ensuring seamless access and operational security. Here’s how you can approach it:

- Creating Structures for Each Environment: Firstly, establish separate warehouses and databases for Development (

DEV_DBT_WH) and Production (PROD_DBT_WH). This division provides a clear distinction between testing and live environments and the compute resources of each one. Next we will create a Database for each one, so that DBT is capable to manage and store the information independently. - Defining and Assigning Roles: After that, define unique roles for each environment –

DEV_DBT_ROLEfor Development andPROD_DBT_ROLEfor Production. These roles help in managing access controls specific to their respective environments. - Granting Privileges: For each role, grant the necessary privileges. Therefore, what we will need for each of the roles is:

- Acquire the privileges of a SYSADMIN.

- USAGE and OPERATE privileges for their respective Warehouse.

- USAGE privileges and the ability to create Schemas in their respective database.

- Privileges for using existing schemas and all future schemas in their respective database.

- Access to Raw Data: Furthermore, both roles should have access to raw data, essential for consistent data processing and analysis across both environments. However, it’s crucial to manage this access carefully to prevent unintended modifications or breaches in data security, then, we only grant the SELECT privilege on this tables to the both roles.

- User Creation: Creates an user for each envirnoment and assign its role, this user will be used for the DBT interface to connect with Snowflake.

Remember, a clear understanding of the roles and privileges in Snowflake is key to implementing these environments effectively. For a detailed understanding of DBT, I recommend checking out the Advanced Deployment Course on DBT, which will complement your Snowflake setup perfectly.

Where to use this USERS in DBT?

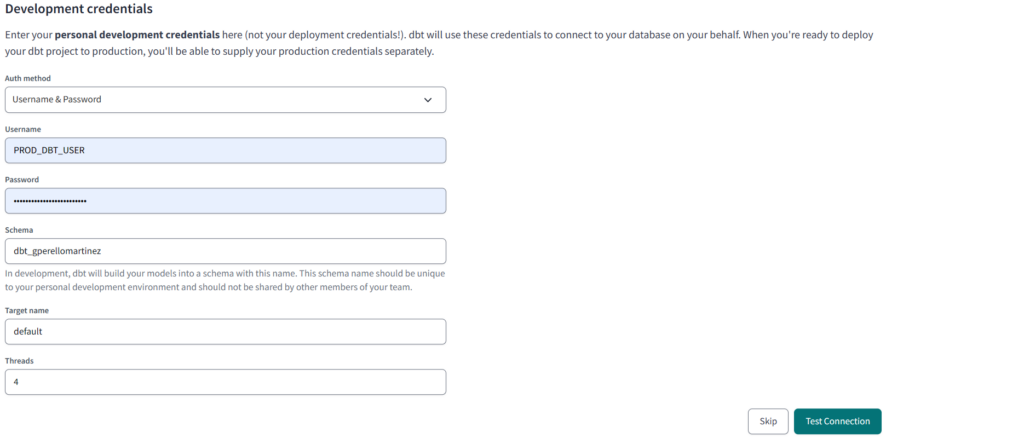

When we have all the structure created with one user for Development and one user for Production, we must assign each user to each environment in DBT. This is achieved by creating an Environment in the “Development Credentials” section, as shown in the image. We need to enter our user credentials according to the type of Environment we are creating. Then, when we click on test connection, we will have a test to confirm that we can connect to Snowflake.

From now on, whenever we are in Development (and therefore editing DBT code), all models will be loaded into the Development Snowflake database. Conversely, for all the JOBS we create for our Production environment, all models will be loaded into the Production Snowflake database.