In the previous chapters of this series, we have seen how to load through staging data from foreign sources to your Snowflake account.

But what could we do if we plan to incorporate data continously in our Snowflake environment?

Let’s talk about Snowflake Snowpipe!

What is a Snowpipe?

A Snowpipe is a continuous data ingestion service offered by Snowflake that enables loading data from files as soon as they’re available in a stage.

In other words, it is a simplified method that prevents the user from having to feed manually to the tables frequently updated data from files in micro-batches.

Even though Snowflake supports loading data from any of the three cloud storage service providers (i.e. Amazon S3, Microsoft Azure Blob, and Google Cloud Storage) irrespective of the cloud platform, to set up a pipe it is necessary that your data is saved on a platform of the same kind as your cloud provider.

To express it better with an example: if the data is stored on an Amazon S3 bucket, your Snowflake account should run on AWS Services in order to set up a pipe.

Practically, a pipe is a named, first class Snowflake object that carries a COPY INTO statement. The command detects the source location of the target table and data files. As dependent on a stage, Snowpipe supports all data types – including semi-structured types (check our previous post on file formats).

How to activate a pipe

Snowflake uses two methods for detecting staged files:

- Cloud Messaging: With this method, every time loading of new files on an external stage occurs, event notifications for Cloud Storage inform Snowflake about the arrival of new material. Then, the pipe queues this files and data get loaded into the target table in a continuous and serverless way. Specific parameters for the ingestion can be defined in the pipe object.

- Calling REST endpoints: Here, the client application calls a public REST endpoint and shares a pipe object name and a data filename list. If new files that match the list are found in the stage addressed by the pipe, they get added in the loading queue. At this point, the pipe proceeds operating as mentioned for the other method.

Features

The features that are supported by default on Snowflake for a Snowpipe are the following:

- Serverless Computing: Snowflake provides autonomously a virtual warehouse to run the pipeline at the moment new data is available.

- Single Queue Data Loading: For every pipe object, Snowflake creates single queues for the sequencing of waiting data.

- Data Duplication: It prevents the same files from being loaded twice and data duplication in tables, and does not load files with the same name even if they are modified later (check stages)

Concerning creation and management of pipes, Snowflake offers a list of possible DDL commands, such as:

- CREATE PIPE

- DESCRIBE PIPE

- ALTER PIPE

- DROP PIPE

- SHOW PIPES

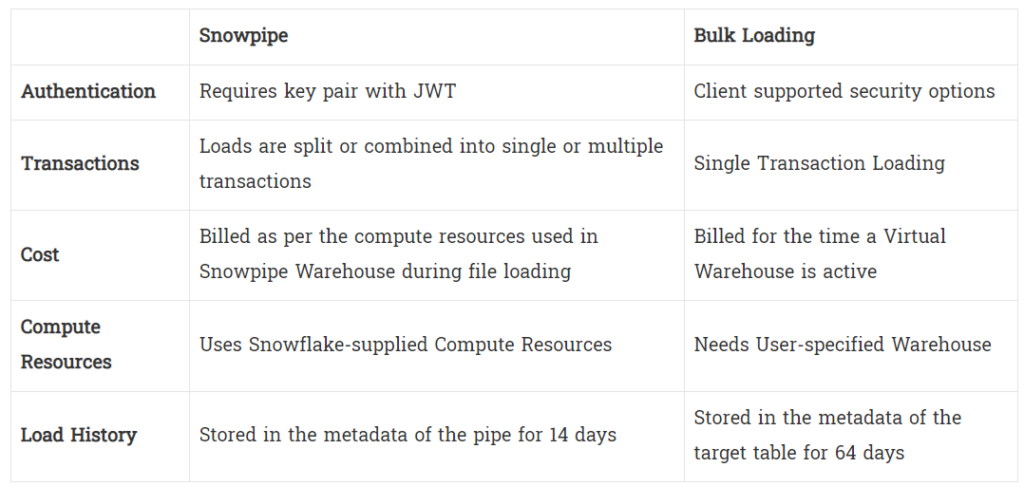

Differences between Snowpipe and Bulk Loading

Here briefly the differences between Snowpipes and Bulk Loading:

How to recall them

If it is an external stage (AW3, GCS, Azure) a pipe can be set to always read through it and automatically move the new data into the desired tables as soon as changes in the files are detected (read: Cloud Messaging).

If it is an internal stage, (only table or named – it doesn’t work for user stages) the pipe cannot be set to do the same, but must be triggered. This can be done by calling the REST API for Snowpipe everytime we know the content of the stage has been altered (read: Calling REST endpoints).

Alternatively, if the changes in the files do not happen randomly but on a time basis and no pipe can be set, a task (which is a Snowflake iterative statement that activates if a specific condition is verified), can be written to automatically load from the stage (check this blog!).

Conclusion

We have talked about pipes, showing both what they are and how to set them in place.

We hope you have found this series of articles on how to stage on (and from) Snowflake useful!

Have questions about data? Be sure to check our blog.