In my last post, I briefly introduced Dagster.

In data engineering, effective orchestration tools are essential for managing increasingly complex data workflows. Among the most popular solutions are Apache Airflow and Dagster.

While Airflow has been the go-to standard for years, Dagster offers a modern, asset-first approach tailored to today’s fast-paced data environments. This post compares the two tools to help you decide which is better suited to your team’s needs.

A Tale of Two Tools

Apache Airflow: The Industry Standard

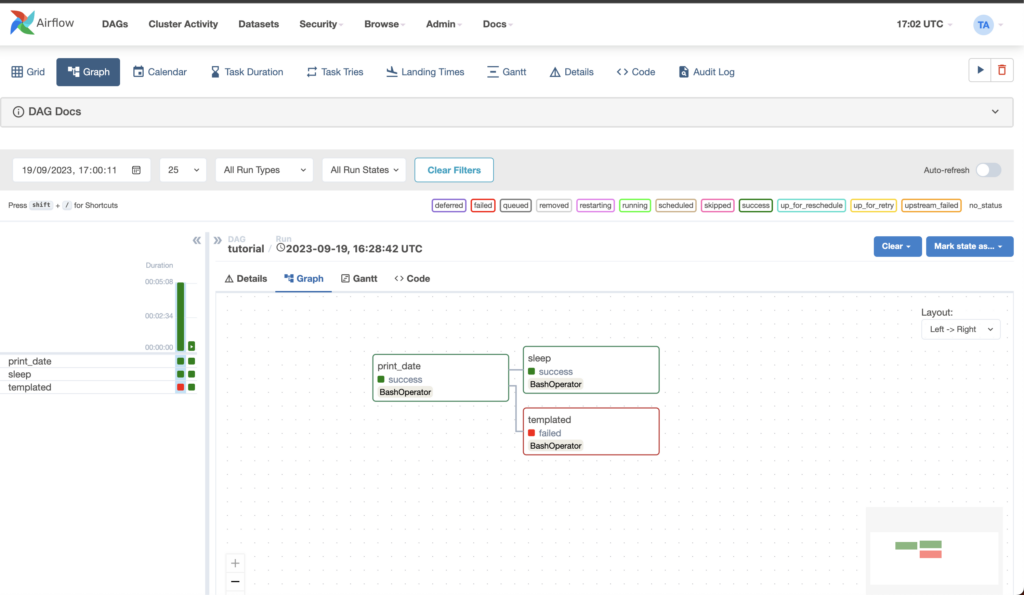

Created at Airbnb in 2014 and open-sourced in 2015, Apache Airflow has become the most widely adopted data orchestration tool. Its task-centric model organizes workflows as Directed Acyclic Graphs (DAGs) of tasks, which makes it highly flexible and extensible. With more than 1,600 plugins, robust scheduling options, and a vast community, Airflow continues to dominate the orchestration landscape.
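To make the task-centric model concrete, here is a toy sketch in plain Python. It is not Airflow's API: the task names and the `run_dag` helper are made up for illustration, and the standard library's `graphlib` stands in for a scheduler. The point is only that the unit of work is a task, and the edges between tasks are declared explicitly.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each one is a unit of work, not a data asset.
def extract():
    return "extracted"

def transform():
    return "transformed"

def load():
    return "loaded"

TASKS = {"extract": extract, "transform": transform, "load": load}

# Edges are declared explicitly: each task maps to the tasks it waits on.
DEPENDENCIES = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

def run_dag(tasks, dependencies):
    """Run every task once, in an order that respects the declared edges."""
    order = list(TopologicalSorter(dependencies).static_order())
    return [(name, tasks[name]()) for name in order]

if __name__ == "__main__":
    for name, result in run_dag(TASKS, DEPENDENCIES):
        print(name, "->", result)
```

In Airflow itself, the same shape is written with operators inside a DAG definition, and dependencies are typically chained with the `>>` operator. Notice that nothing in the graph says what data each task produces. That gap is where the two tools diverge.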

Dagster: The Asset-First Newcomer

Dagster, launched in 2019, takes an asset-centric approach to orchestration, focusing on the data that workflows produce rather than on the tasks themselves. By emphasizing Software-Defined Assets (SDAs) and robust testing, Dagster aims to address areas where Airflow has historically been weaker: data lineage tracking, debugging, and testing.

Dagster’s DAG. Source: Dagster Documentation
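The asset-first idea is easier to see in code. The sketch below is a toy stand-in written with the standard library only. The `asset` decorator, the `ASSETS` registry, and the `materialize` helper are hypothetical and are not Dagster's implementation. Dagster's own `@asset` decorator works on a similar idea, inferring upstream assets from function argument names.

```python
import inspect

ASSETS = {}

def asset(fn):
    """Register a function as a named asset (toy stand-in, not Dagster's decorator)."""
    ASSETS[fn.__name__] = fn
    return fn

@asset
def raw_orders():
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 35.5}]

@asset
def order_totals(raw_orders):
    # The parameter name declares the dependency on the raw_orders asset.
    return sum(row["amount"] for row in raw_orders)

@asset
def revenue_report(order_totals):
    return f"Total revenue: {order_totals:.2f}"

def upstream_of(name):
    """Direct upstream assets, read from the function's parameter names."""
    return list(inspect.signature(ASSETS[name]).parameters)

def materialize(name, cache=None):
    """Compute an asset after recursively computing everything upstream of it."""
    cache = {} if cache is None else cache
    if name not in cache:
        inputs = {dep: materialize(dep, cache) for dep in upstream_of(name)}
        cache[name] = ASSETS[name](**inputs)
    return cache[name]

if __name__ == "__main__":
    print(materialize("revenue_report"))  # Total revenue: 55.50
```

Here the graph falls out of the data definitions: you ask for `revenue_report`, and the dependencies are discovered rather than wired by hand.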

Key Differences Between Airflow and Dagster

| Feature/Aspect | Apache Airflow | Dagster |
|---|---|---|
| Pipeline Concept | Task-based DAGs | Asset-based workflows with “ops” representing steps |
| Data Lineage | Experimental with task-level inputs/outputs | Native asset-level lineage, offering granular insights |
| Local Development | Requires a database, web server, and scheduler | Streamlined with CLI command `dagster dev` |
| Testing & Debugging | Limited testing; challenging to replicate production conditions | Built-in testing with type validation for inputs/outputs |
| Extensibility | Over 1,600 plugins and support for custom operators | Fewer plugins but strong integration with Python workflows |
| Scalability | Scales with Kubernetes and Celery executors | Built-in support for Kubernetes; efficient parallelism with asset-based execution |
| Community Support | Mature, with thousands of contributors and extensive resources | Growing, with an active open-source community |
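The "Data Lineage" row is worth a quick illustration. Once dependencies are recorded per asset, answering "what does this table depend on?" becomes a graph walk. The sketch below assumes a hypothetical `UPSTREAM` mapping and asset names. It is plain Python, not either tool's lineage API.

```python
# Hypothetical asset graph: each asset maps to its direct upstream assets.
UPSTREAM = {
    "raw_orders": [],
    "raw_customers": [],
    "clean_orders": ["raw_orders"],
    "customer_revenue": ["clean_orders", "raw_customers"],
}

def lineage(asset_name, upstream=UPSTREAM):
    """Return every direct and indirect upstream asset, sorted by name."""
    seen = []
    stack = list(upstream[asset_name])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.append(current)
            stack.extend(upstream[current])
    return sorted(seen)

if __name__ == "__main__":
    print(lineage("customer_revenue"))
    # ['clean_orders', 'raw_customers', 'raw_orders']
```

With task-level graphs, you can run the same walk, but the answer is a list of tasks, and mapping those back to tables or files is left to you.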

Why Teams Are Switching to Dagster

Teams that move from Airflow to Dagster typically cite several reasons:

- Asset-Centric Workflows: Dagster’s focus on data assets simplifies dependency tracking and improves visibility, especially in complex workflows.

- Robust Testing: With built-in support for unit testing and CI/CD, Dagster allows developers to catch errors early, reducing risks in production.

- Cloud-Native Infrastructure: Designed for modern data stacks, Dagster seamlessly integrates with Kubernetes, Docker, and other cloud-based tools.

- Improved Debugging: Dagster’s structured logs and intuitive UI make identifying and resolving pipeline issues faster and easier.
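The testing point deserves a small example. When a pipeline step is just a function from inputs to an output, you can test it with in-memory data and no scheduler. The sketch below uses a hypothetical `checked` decorator to mimic input/output type validation. It is an illustration of the idea in plain Python, not Dagster's type system or test utilities.

```python
import functools
from typing import get_type_hints

def checked(fn):
    """Validate keyword inputs and the return value against type annotations."""
    hints = get_type_hints(fn)

    @functools.wraps(fn)
    def wrapper(**kwargs):
        for name, value in kwargs.items():
            expected = hints.get(name)
            if expected is not None and not isinstance(value, expected):
                raise TypeError(f"{fn.__name__}: input '{name}' must be {expected.__name__}")
        result = fn(**kwargs)
        expected = hints.get("return")
        if expected is not None and not isinstance(result, expected):
            raise TypeError(f"{fn.__name__}: output must be {expected.__name__}")
        return result

    return wrapper

@checked
def order_totals(raw_orders: list) -> float:
    # Start the sum at 0.0 so an empty input still yields a float.
    return sum((row["amount"] for row in raw_orders), 0.0)

def test_order_totals_sums_amounts():
    assert order_totals(raw_orders=[{"amount": 1.5}, {"amount": 2.5}]) == 4.0

def test_order_totals_rejects_wrong_input_type():
    try:
        order_totals(raw_orders="not a list")
    except TypeError:
        return
    raise AssertionError("expected a TypeError for a non-list input")

if __name__ == "__main__":
    test_order_totals_sums_amounts()
    test_order_totals_rejects_wrong_input_type()
    print("all checks passed")
```

Tests like these run in CI in milliseconds, which is the practical meaning of catching errors before they reach production.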

When to Choose Airflow vs. Dagster

Choose Airflow if:

- You need a mature, trusted tool with extensive community support.

- Your team relies on highly custom workflows or requires integrations with legacy systems.

- Your workflows involve complex dependencies, such as dynamic task generation or branching.

Choose Dagster if:

- You prioritize data quality, lineage, and visibility across workflows.

- Your team values rapid development, testing, and deployment cycles.

- You work with modern tools like dbt or Spark and need seamless integration with a cloud-native ecosystem.

Real-World Use Cases

Airflow Success Stories

- Airbnb: Automates ETL processes, integrating multiple data sources.

- Robinhood: Orchestrates large-scale financial data pipelines for real-time trading and reporting.

Dagster Success Stories

- BenchSci: Improved visibility and debugging capabilities, accelerating development cycles.

- SimpliSafe: Streamlined data workflows, enhancing pipeline reliability and operational efficiency.

Final Thoughts: Choosing the Right Tool for Your Needs

Both Apache Airflow and Dagster are powerful orchestration tools, but their strengths cater to different needs. Airflow’s maturity and extensive ecosystem make it ideal for well-established teams with complex, task-oriented workflows. Meanwhile, Dagster’s modern, asset-based design is perfect for fast-moving teams seeking better testing, debugging, and data lineage capabilities.

Ultimately, the right choice depends on your organization’s workflows, goals, and the skills of your data team. Whether you choose Airflow’s proven reliability or Dagster’s innovative approach, investing in the right orchestration tool will set your data operations up for success.

Photo by Arindam Mahanta on Unsplash